In the annals of enterprise, there exists a concept known as a “unified data analytics platform”. One place to store, organize, train on, and query all your data.

For the past 10 years, Databricks has been building this software. But there is another company with a long history of serving enterprises and building data products which always had the potential to provide some of these workflows for customers.

With the release of Fabric at their 2023 Build conference, Microsoft is taking steps to give enterprises an all-in-one platform for data analytics. Could their latest effort be a real threat to Databricks? We dissect the pricing and workflows of the two offerings.

Unified Data Analytics Platform

If you are a data engineering professional, feel free to skip this section. But as a point of reference to talk about cloud costs, there are traditionally three types of data systems in a business in order from most structured to least structured: databases, data warehouses, and data lakes. Databricks and Fabric target a fusion between data warehouses and data lakes, where analytical queries like SELECT SUM(amount) FROM customers can be run on semi-structured data such as CSVs or JSON.

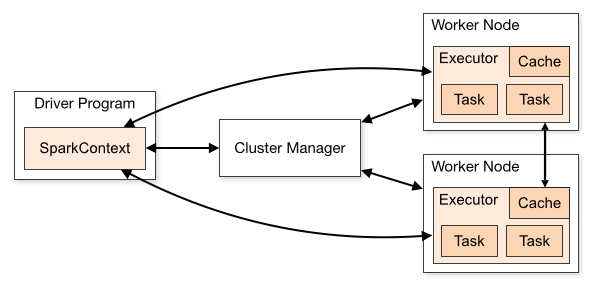

Basic execution flow of Apache Spark. Source.

Apache Spark, an open-source data processing framework that handles job scheduling, retries, and results collection, is at the core of executing the queries and jobs that users run on data in the “lakehouse”. Spark creates an execution graph of the query/job to be run and executes it across a distributed cluster of compute nodes, using data from an object store such as Azure Blob Storage.

Running SQL queries on huge amounts of semi-structured data about the simplest type of job that Databricks or Fabric would handle. It’s also possible to run Python, Java, and other languages as well as train machine learning models, do stream processing, and run data science notebooks that use Spark in the background to execute jobs.

Databricks vs Fabric Architecture

Under the hood, Databricks and Fabric are both using Apache Spark to handle compute workloads, so their architecture is probably not that different. However, what users see is very different. Fabric combines compute, data transfer, storage volume, and other costs into “Capacity Units” which is a single SKU that is charged for workloads. Databricks allows customers to configure which instance types and other infrastructure are used and deployed within the customer’s own cloud infrastructure and then charges DBUs as a sort of management fee for managing job execution.

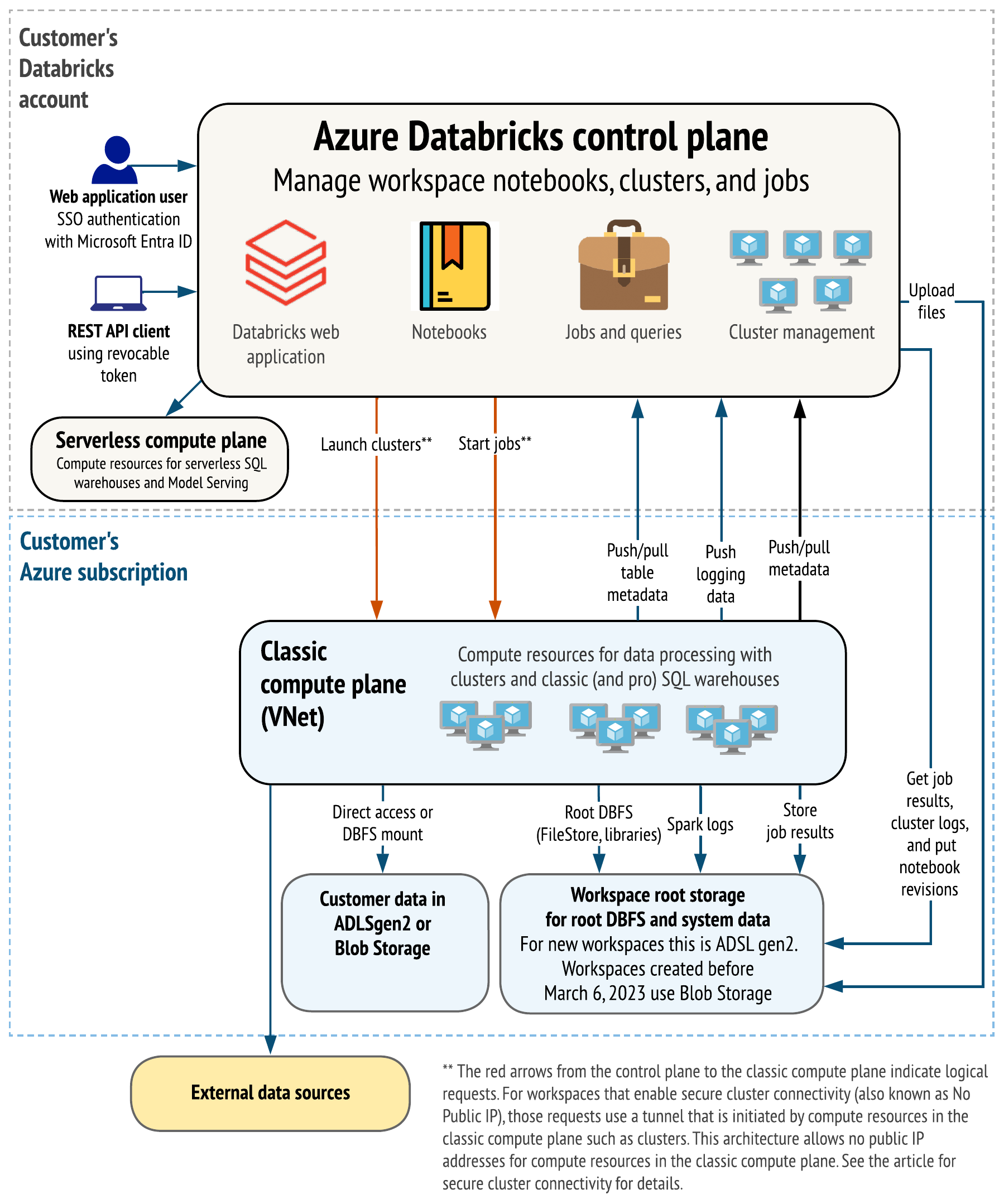

Databricks architecture on Azure, where Databricks manages infrastructure within the customers' account. Source.

Regarding storage, Fabric uses “OneLake”, Azure’s lakehouse solution, which is built on the same “Delta Lake” technology that Databricks employs. Both lakehouses are physically stored on Azure Data Lake Storage Gen2 (ADLS Gen2) or Amazon S3. Remember, the point of the datalake is to store one semi-structured copy of the data that is used by all analytics processes. In Fabric’s case, they face the issue that existing Power BI and Synapse users may have their data in many separate buckets. To merge these silos into OneLake, Fabric has a feature called OneLake shortcuts where users can point their data at OneLake to be able to access it through the Fabric runtime.

Databricks vs Fabric Feature Comparison

The simplest way to think about Fabric is that it extends BI and data warehousing capabilities in Azure in a form that is more similar to “how Databricks does it”. Fabric has more limited machine learning capabilities and fewer real-time streaming and ETL features as compared to Databricks.

| Feature | Databricks | Fabric |

|---|---|---|

| SQL | X | X |

| Python | X | X |

| Data Science Notebooks | X | X |

| Managed Apache Spark | X | X |

| Data Engineering | X | X |

| Serverless SQL | X | X |

| MLFlow | X | X |

| Delta Live Tables | X | |

| Model Serving | X |

Feature and workload comparison for Databricks and Fabric.

Delta Lake Live Tables

Delta Tables are an extension of the Parquet file format which adds some ACID guarantees. Delta Live Tables take things even further and add a streaming layer on top of Delta Tables for pipeline-esque functionality. In practice, you can write SQL or Python which continuously runs on new data as it arrives in the lakehouse. Currently, there is no similar functionality in Fabric.

MLFlow

MLFlow is an open-source project for managing the full lifecycle of machine learning development, including training, deployment, retraining, and model development. MLFlow is deeply integrated into Databricks. Fabric provides “MLFlow endpoints” that Microsoft calls Experiments.

Databricks vs Fabric UI and UX

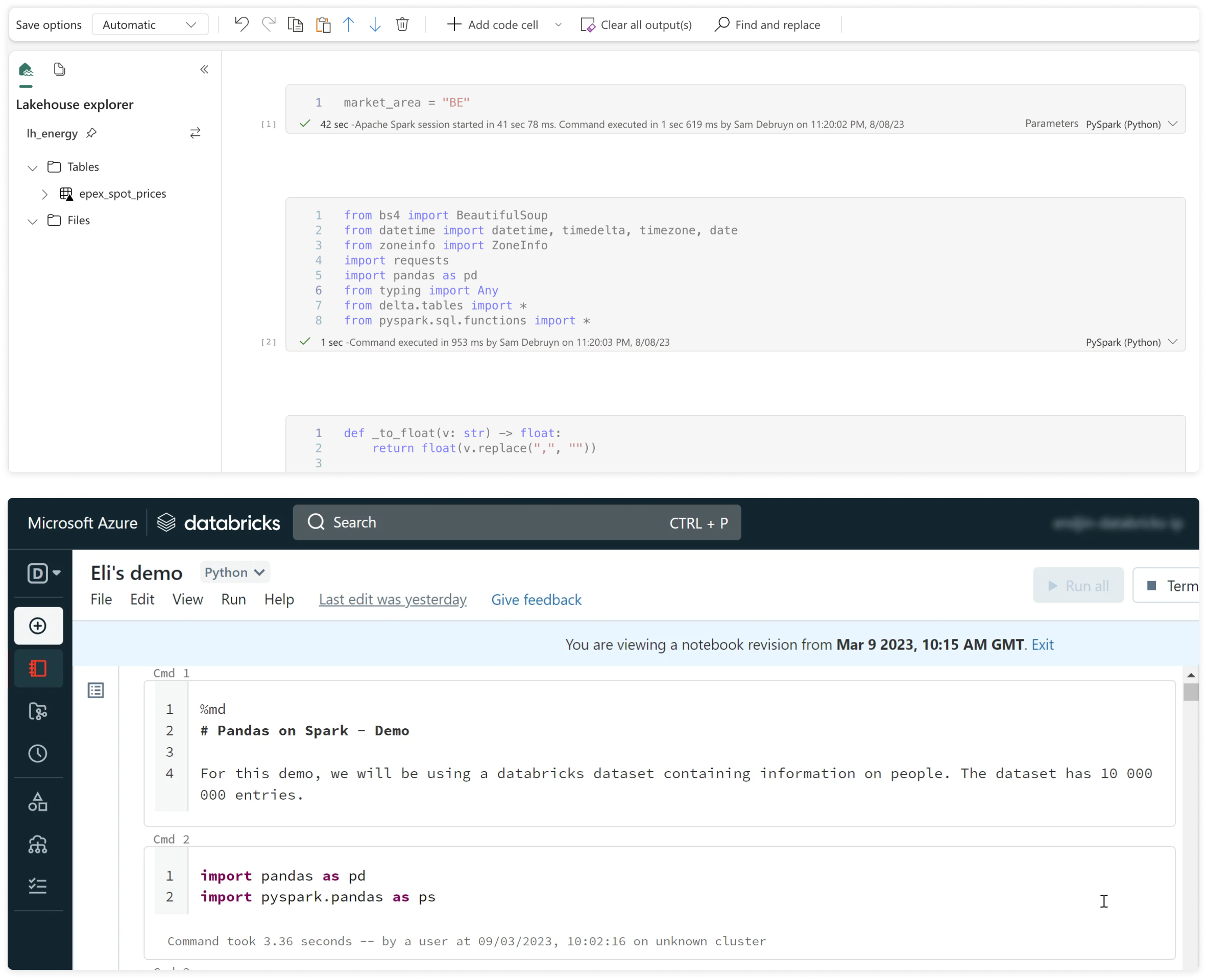

Notebook UI in Fabric vs Databricks. Source: Sam Debruyn and Endjin.

Both Databricks and Fabric users will see a central console where they can manage their workspaces, which are collections of data tools. In Fabric, there are what we would describe as “native Fabric” tools such as Notebooks and Spark Jobs and “product experiences” which are chiefly Power BI and Synapse that are launched within the Fabric console. As you use Synapse or PowerBI in Fabric you may run into a few gotchas where certain queries or flows are not yet supported. Endjin has a good explainer on Synapse vs Fabric.

Databricks vs Fabric Pricing Comparison

It’s very difficult to directly compare Databricks and Fabric pricing, but we can dissect their pricing models. There are also some benchmarks that are now available where data engineers such as Mimoune Djouallah have published early pricing for Fabric.

Databricks Pricing

Databricks pricing (or really total cost of ownership) has 4 dimensions: workload, platform tier, cloud provider, and instance type. The workload is the type of data job you are running, including:

- Apache Spark (Workflows)

- Streaming data (Delta Live Tables)

- SQL, BI, analytics (Databricks SQL)

- Notebooks and AI (Data Science & ML)

The platform tiers - Standard (expiring October 1, 2025), Premium, and Enterprise - dictate what data catalog, lineage, governance, and security features are available. For example, Premium comes with audit logs while Standard does not.

| Service (Premium tier) | Cost (per DBU on Azure) |

|---|---|

| Mosaic AI Model Serving | $0.07 |

| Workflows (Photon) | $0.15 |

| Delta Live Tables | $0.20 |

| Databricks SQL | $0.22 |

| Workflows (Serverless) | $0.35 |

| Delta Live Tables (Serverless) | $0.35 |

| Delta Live Tables (Pro) | $0.25 |

| Delta Live Tables (Advanced) | $0.36 |

| Databricks SQL (Pro) | $0.55 |

| Data Science | $0.55 |

| Databricks SQL (Serverless) | $0.70 |

| Data Science (Serverless) | $0.75 |

Pricing for Databricks in AWS US East (N Virginia).

All three major cloud providers are supported but costs and feature availability vary. Azure is more expensive than AWS and GCP, while GCP and Azure do not have Photon - a “native vectorized [parallel processing] engine entirely written in C++”, which can speed up and reduce costs for certain jobs.

On each cloud provider, you’ll need to select an instance type to run jobs which is an additional cost outside of Databricks as Databricks workloads run within customers’ own cloud environments. You’ll also want to tack on attached disks, cloud storage, and public IP addresses to get a true total cost of ownership for Databricks.

Microsoft Fabric Pricing

Fabric’s pricing is theoretically simpler. Although by giving customers only one compute dimension to price against, you might say that Microsoft has made cost estimation for Fabrics harder. In fact, they explicitly say:

Currently, there is no formula that can provide an easy upfront estimate of the capacity size you’ll need. The best way to size the capacity is to put it into use and measure the load.

With the pricing table below (Power BI information added by Radacad), Fabric users get an all-in cost for the workflows Fabric supports:

- Apache Spark

- Streaming data (Synapse Real Time Analytics)

- SQL, BI, analytics (Power BI and Synapse Data Warehousing)

- Notebooks and AI (Synapse Data Science)

| SKU | Power BI SKU | CU | Spark VCores | Power BI VCores | Hourly Cost |

|---|---|---|---|---|---|

| F2 | - | 2 | 4 | 0.25 | $0.36 |

| F4 | - | 4 | 8 | 0.5 | $0.72 |

| F8 | EM/A1 | 8 | 16 | 1 | $1.44 |

| F16 | EM2/A2 | 16 | 32 | 2 | $2.88 |

| F32 | EM3/A3 | 32 | 64 | 4 | $5.76 |

| F64 | P1/A4 | 64 | 128 | 8 | $11.52 |

| F128 | P2/A5 | 128 | 256 | 16 | $23.04 |

| F256 | P3/A6 | 256 | 512 | 32 | $46.08 |

| F512 | P4/A7 | 512 | 1024 | 64 | $92.16 |

| F1024 | P5/A8 | 1024 | 2048 | 128 | $184.32 |

| F2048 | - | 2048 | 4096 | 256 | $368.64 |

Pricing for Microsoft Fabric in AWS US East (N Virginia).

Note that the use of OneLake - the storage layer that connects data sources within the Fabric platform - has a cost of $0.023 per GB per month. Databricks also has an associated storage cost which is simply the cost of storing Databricks data on Azure Blob Storage or S3.

Comparing Managed Apache Spark

Without a common pricing unit (such as CPUs) to compare Databricks and Fabric, we can look at how much Databricks usage you get per Fabric SKU and compare from there. The lowest Fabric SKU which equates to 1 Power BI V-Cores is F8 at $1.44 per hour with 8 capacity units. A similar Databricks cost for a Compute Job on an E8ads v5 would be $1.35 per hour. Beyond that, because estimating the cost of Apache Spark jobs ahead of time is challenging, the best we can do is think through how each service charges for compute.

Fundamentally, Databricks provides a management layer that executes jobs within your infrastructure. For this, they charge the fees outlined above. Databricks has not said publicly what ingredients go into a DBU, other than it is a “normalized unit of processing power” that “includes the compute resources used and the amount of data processed”. For compute jobs, we can venture to say that the number of spark executor cores is certainly a factor.

Microsoft says this regarding Fabric SKUs:

One way to think of it is that two Spark VCores (a unit of computing power for Spark) equals one capacity unit. For example, a Fabric capacity SKU F64 has 64 capacity units, which is equivalent to 128 Spark VCores.

It’s possible that Spark VCores refer to threads but they seem to be able to handle less work than a thread, where it’s only possible to run more than 1 concurrent job once there are 16 VCores available. Microsoft says:

The Spark VCores associated with the capacity is shared among all the Spark-based items like notebooks, Spark job definitions, and the lakehouse created in these workspaces.

In our view, the Fabric pricing model hides a lot of complexity underneath a seemingly simple tiered consumption-based model. With Databricks, the charging model is clear: choose an instance, choose a storage system, select a service tier, and select a job type. From there it’s easier to tune jobs as well. Fabric’s “shared capacity” where you have Notebook users competing with people running Spark jobs competing with ETL that is hitting the lakehouse seems tougher to manage.

Conclusion: Enterprise Data Analytics

Credit where credit is due: Microsoft has made huge strides in unifying Synapse (data warehouse and notebooks), Power BI (business intelligence), Spark, and a dash of ML capabilities into one platform that simplifies the management of all these workloads. But digging deeper, we can appreciate how Databricks clearly segments out different workflows, compute, and storage. Our guess is that Fabric’s giant leap forward for Microsoft may push Databricks to move faster with supporting standard lakehouse storage formats like Iceberg and launch more BI-focused products as they are already doing. Within Azure, more organizations will be evaluating if they can just upgrade all their existing Microsoft data products “into” Fabric versus moving those workloads to Azure Databricks. But for technically sophisticated data teams who are introducing AI workloads and aggressively tuning their data engineering stacks, Databricks remains king.