Cloud Cost Report

The Q4 2024 Cloud Cost Report analyzes real-world data to quantify how cloud spending patterns are changing.

Fresh off the quarterly earnings for Microsoft, Google, and Amazon, we are releasing the Q4 2024 Cloud Cost Report, an analysis of cloud usage based on anonymized Vantage customer data. Vantage is a cloud cost visibility and optimization platform with a unique view into industry trends, thanks to tens of thousands of connected infrastructure accounts across 19 cloud providers. To discuss this report in more detail, join our growing Slack Community of over 1,000 engineering leaders, FinOps professionals, and CFOs. View past reports here.

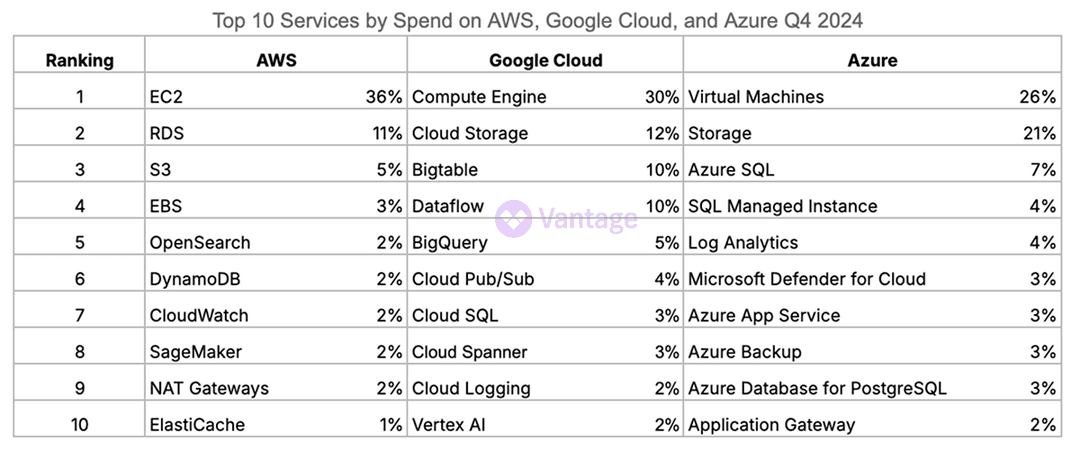

Top Services by Spend Across Clouds

Compute, storage, and databases remain the pillars of the three main cloud providers: AWS, Google Cloud, and Azure. However, the impact of AI is becoming more apparent, with SageMaker at number 8 for AWS spend and Vertex AI at number 10 for Google Cloud spend.

Managing AI spend is becoming a higher priority for cloud users after an era of experimentation. One example is Amazon EC2 users committing to Reservations and Savings Plans to cover their GPU instances.

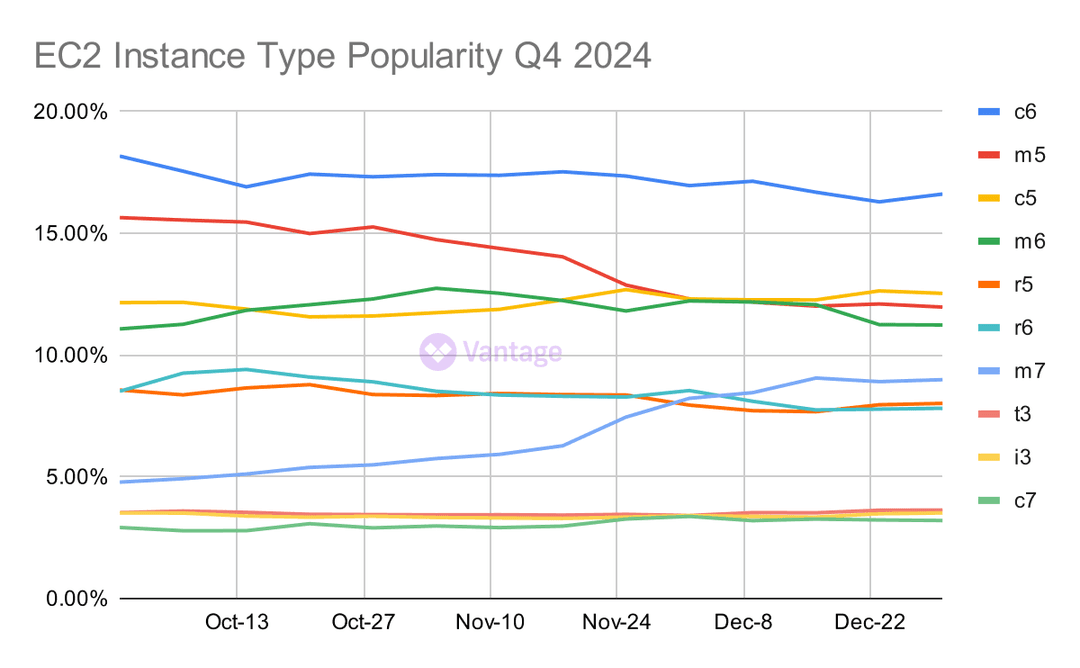

EC2 Instance Movement With the M Family

EC2 users continue to gradually modernize their compute infrastructure. As predicted last quarter, m6 surpassed m5 usage at one point, as users opted for more efficient and cost-effective instances.

m7 also showed remarkable growth last quarter, nearly doubling in adoption and ending the quarter within 10% of m5's spend.

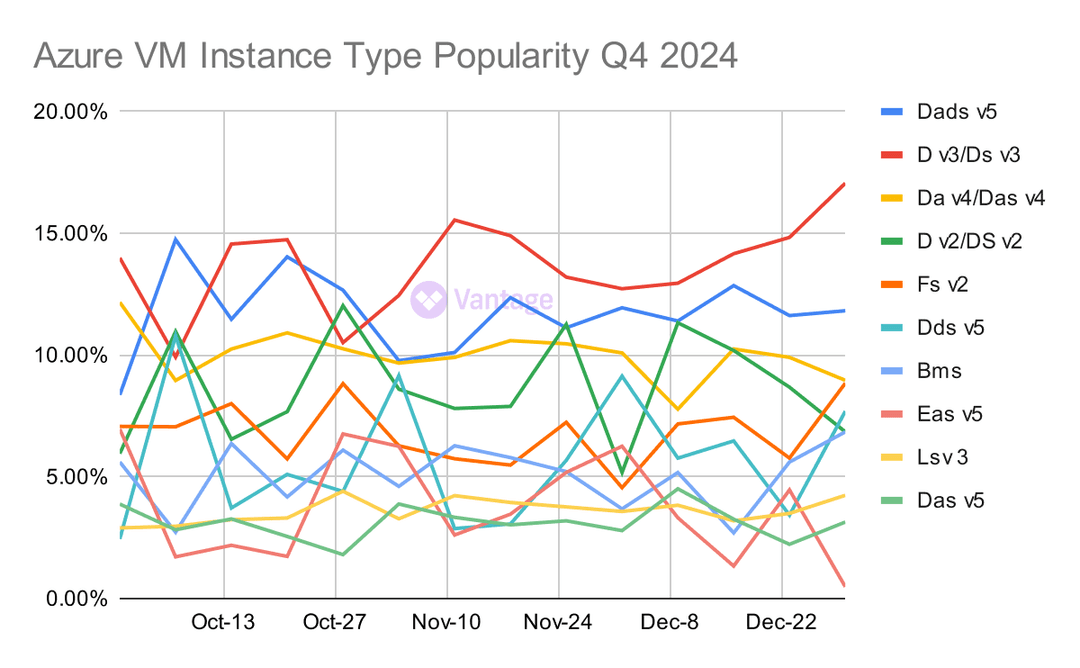

The Fluctuation of Azure VMs

Azure VM usage shows significant volatility in Q4 2024, with notably more distributed usage across instance types compared to earlier in the year. The general-purpose D family remains the most popular choice due to its performance balance and range of use cases.

The frequent shifts between instance types can be attributed to the flexibility Azure users have to change compute resources, since most of them favor pay as you go pricing (see the section on Azure pay as you go VMs below).

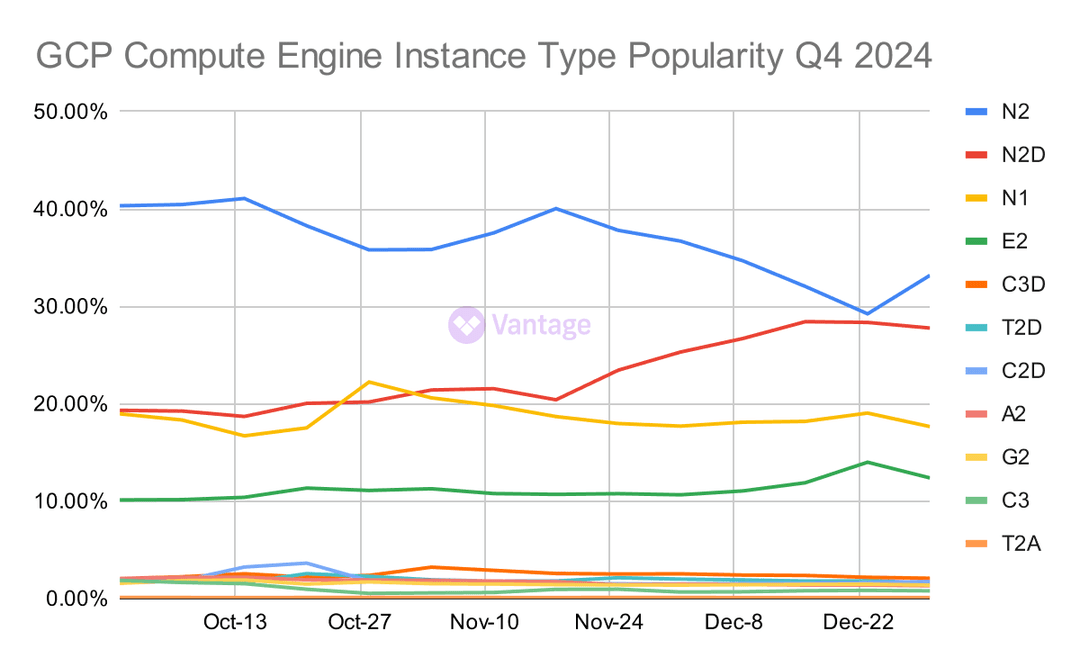

The General Purpose N-Series Leads GCP Compute

Google Cloud's N-series instances continue to dominate compute usage, with N2 and N2D together accounting for over 50% of instance usage. This, however, is down from earlier in the year when N2 and N2D accounted for about 80% of the spend.

Spend is more distributed now, with N1 and E2 steadily making up ~20% and ~10% respectively. This suggests users are making more of an effort to choose instance types specific to their workloads.

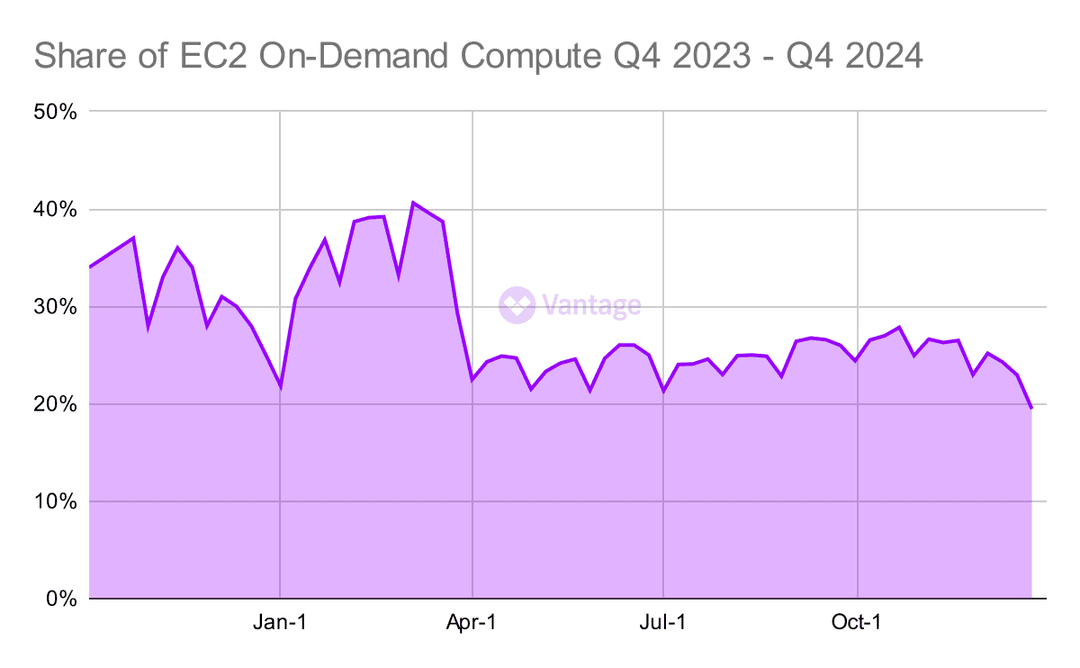

EC2 On-Demand Compute Still Low

EC2 On-Demand spend remains consistently low at around 25%, down significantly from peaks of 40% earlier in the year. This indicates that cost-conscious users continue to favor Reservations and Savings Plans in exchange for discounted rates.

This stability in committed use aligns with a broader industry focus on cost optimization, since commitments are a low-effort way to achieve substantial cost savings.

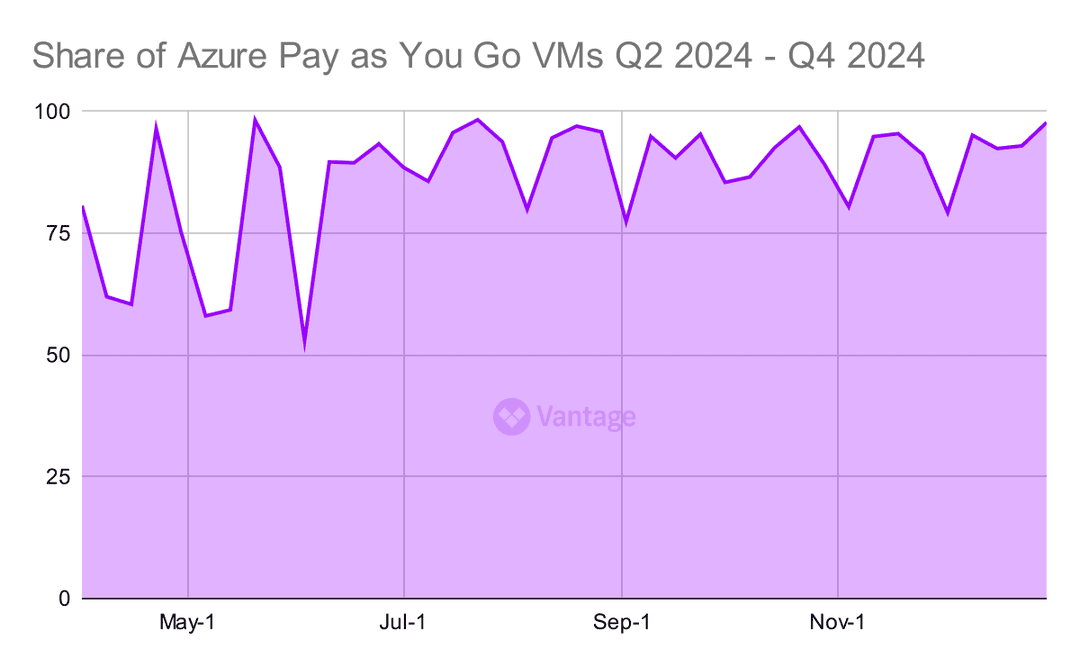

Azure Pay as You Go VMs are the Majority

Azure pay as you go VMs are at the opposite end of the spectrum from EC2 On-Demand. Most Azure users opt for pay as you go plans instead of committing to Reserved VM Instances or savings plans for compute.

For non-production or unpredictable workloads, pay as you go can be more cost-effective since Reserved VM Instances are billed for all provisioned hours, regardless of usage. However, for many workloads, Reserved VM Instances yield substantial cost savings, and Azure currently offers flexible cancellation options, reducing the risks of long-term commitments. Savings plans for compute provide another path to savings, with even more flexibility: users can switch VM sizes or even shift spending between VMs, containers, and other eligible services.
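
The trade-off between pay as you go and a reservation comes down to utilization. The sketch below is a simplified illustration, not Azure's billing logic: the hourly rates are hypothetical, the 730-hour month is an approximation, and upfront payments, cancellation fees, and savings plans are ignored.

```python
def break_even_utilization(payg_hourly: float, reserved_hourly: float) -> float:
    """Fraction of the period a VM must run before a reservation becomes cheaper.

    Pay as you go bills only for hours used; the reservation bills for every
    hour in the period. Both rates are hypothetical inputs.
    """
    if payg_hourly <= 0 or reserved_hourly < 0:
        raise ValueError("rates must be positive")
    return reserved_hourly / payg_hourly


def cheaper_option(payg_hourly: float, reserved_hourly: float,
                   hours_used: float, hours_in_period: float = 730) -> str:
    """Compare total cost for one period (730 hours is roughly one month)."""
    payg_cost = payg_hourly * hours_used
    reserved_cost = reserved_hourly * hours_in_period  # billed whether used or not
    return "reserved" if reserved_cost < payg_cost else "pay as you go"


# Hypothetical rates: $0.10/hour pay as you go, $0.06/hour effective reserved rate.
print(break_even_utilization(0.10, 0.06))
print(cheaper_option(0.10, 0.06, hours_used=300))  # dev VM running part-time
print(cheaper_option(0.10, 0.06, hours_used=730))  # always-on production VM
```

With these assumed rates, the reservation only pays off once the VM runs for more than about 60% of the period, which is why part-time or unpredictable workloads can be better served by pay as you go.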

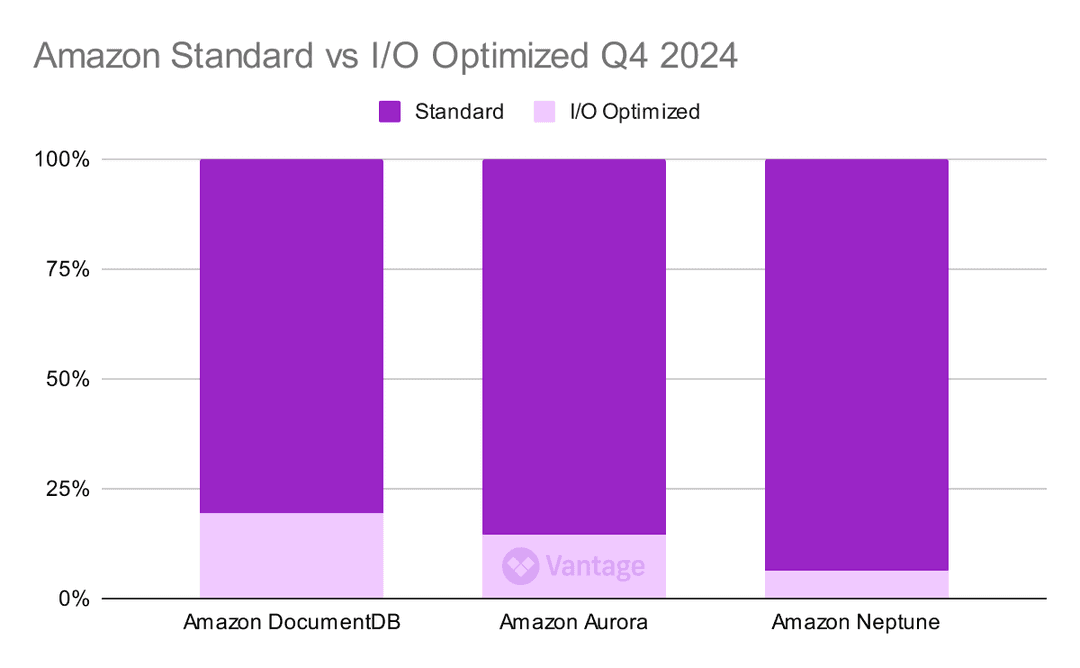

Amazon Standard vs I/O Optimized Databases

Amazon DocumentDB, Aurora, and Neptune all offer two payment options for their databases: Standard or I/O Optimized. I/O Optimized is recommended for cases where I/O charges are more than 25% of spend. It works by not charging for I/O requests in exchange for increased compute and storage pricing.

For DocumentDB, Aurora, and Neptune, users are predominantly choosing the Standard payment plan, with I/O Optimized adoption remaining relatively low across all three services. However, many production workloads have I/O charges that exceed 25% of spend. In such cases, switching to I/O Optimized could result in meaningful cost savings.
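
The 25% rule of thumb can be checked against a bill with simple arithmetic. The following is a minimal sketch that assumes a database's monthly spend can be split into compute, storage, and I/O charges; the function names and dollar figures are hypothetical, and it does not model the actual I/O Optimized price increases.

```python
def io_share(compute: float, storage: float, io: float) -> float:
    """Return I/O charges as a fraction of total database spend."""
    total = compute + storage + io
    if total <= 0:
        raise ValueError("total spend must be positive")
    return io / total


def should_consider_io_optimized(compute: float, storage: float, io: float,
                                 threshold: float = 0.25) -> bool:
    """Flag a database whose I/O share exceeds the 25% guideline."""
    return io_share(compute, storage, io) > threshold


# Hypothetical monthly charges in dollars.
print(should_consider_io_optimized(compute=600, storage=100, io=300))  # I/O is 30% of spend
print(should_consider_io_optimized(compute=800, storage=100, io=100))  # I/O is 10% of spend
```

A flagged database is only a candidate: confirming the savings would require repricing its compute and storage at the I/O Optimized rates.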

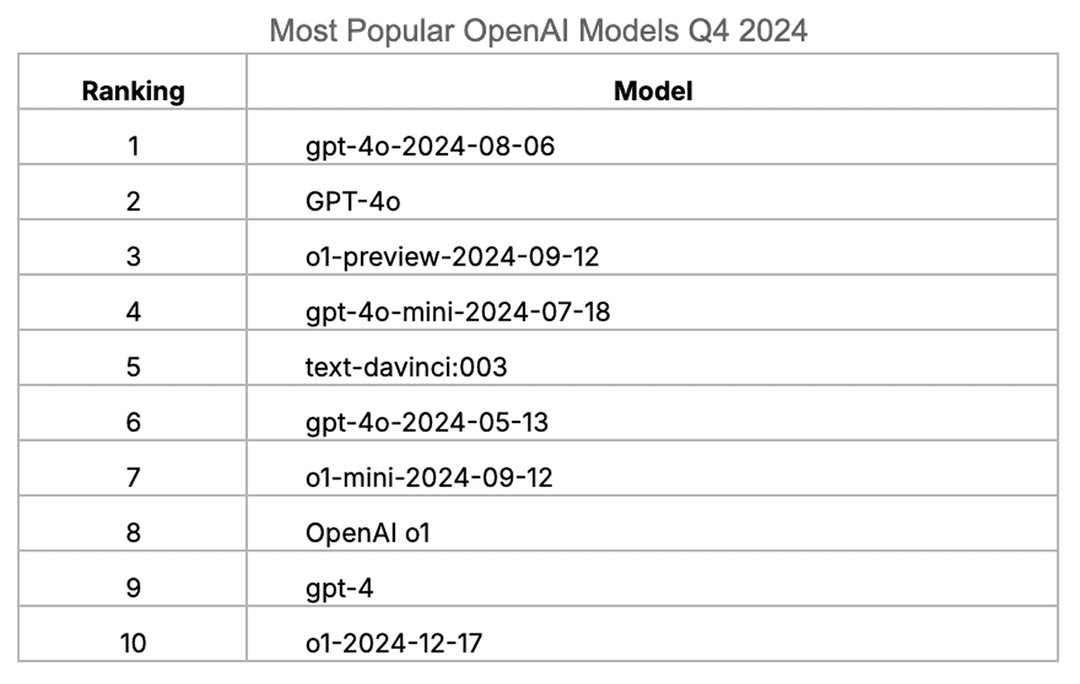

OpenAI Models by Spend

Last quarter, OpenAI made their cost API public and we re-launched our integration with OpenAI to help users monitor and optimize their AI costs. Now, for the first time, we can include OpenAI costs in our quarterly reports.

Looking at models by spend, the recently released GPT-4o and OpenAI o1 are the most popular. The dominance of recent releases indicates a different pattern from traditional cloud services like Amazon EC2, where users gradually upgrade to newer generations. Instead, AI users are actively experimenting with and adopting the latest models, quickly gravitating toward the newest and most powerful options available.