Google Gemini vs Azure OpenAI GPT: Pricing Considerations

With the introduction of Gemini and its competitive pricing structure and groundbreaking 1M token context window, Google is emerging as a strong contender in the AI landscape.

Updated June 26, 2024 to reflect recent updates.

For most of the early 21st century, Google was widely regarded as the leader in AI. However, since OpenAI released ChatGPT to the public at the end of 2022 and set off a whirlwind of AI innovation, Google has taken a back seat. With its 1M token context window and competitive pricing, Gemini could be Google’s chance to retake the lead.

Google Gemini

Google’s generative AI offerings have been hard to follow. In December 2023, not even one year after Bard (Google's former and short-lived flagship LLM) was announced, Google announced Gemini as its “largest and most capable model.” Since then, there have been rapid and significant developments.

February 2024, in particular, was a busy month for updates and rebranding efforts, the biggest of which was the rebranding of Bard to Gemini. Gemini builds upon and surpasses Bard in functionality and scalability. February also saw Duet AI for Workspace (the AI tools that integrate with Google productivity apps such as Gmail, Docs, and Sheets) and Duet AI for Developers consolidated into the Gemini framework.

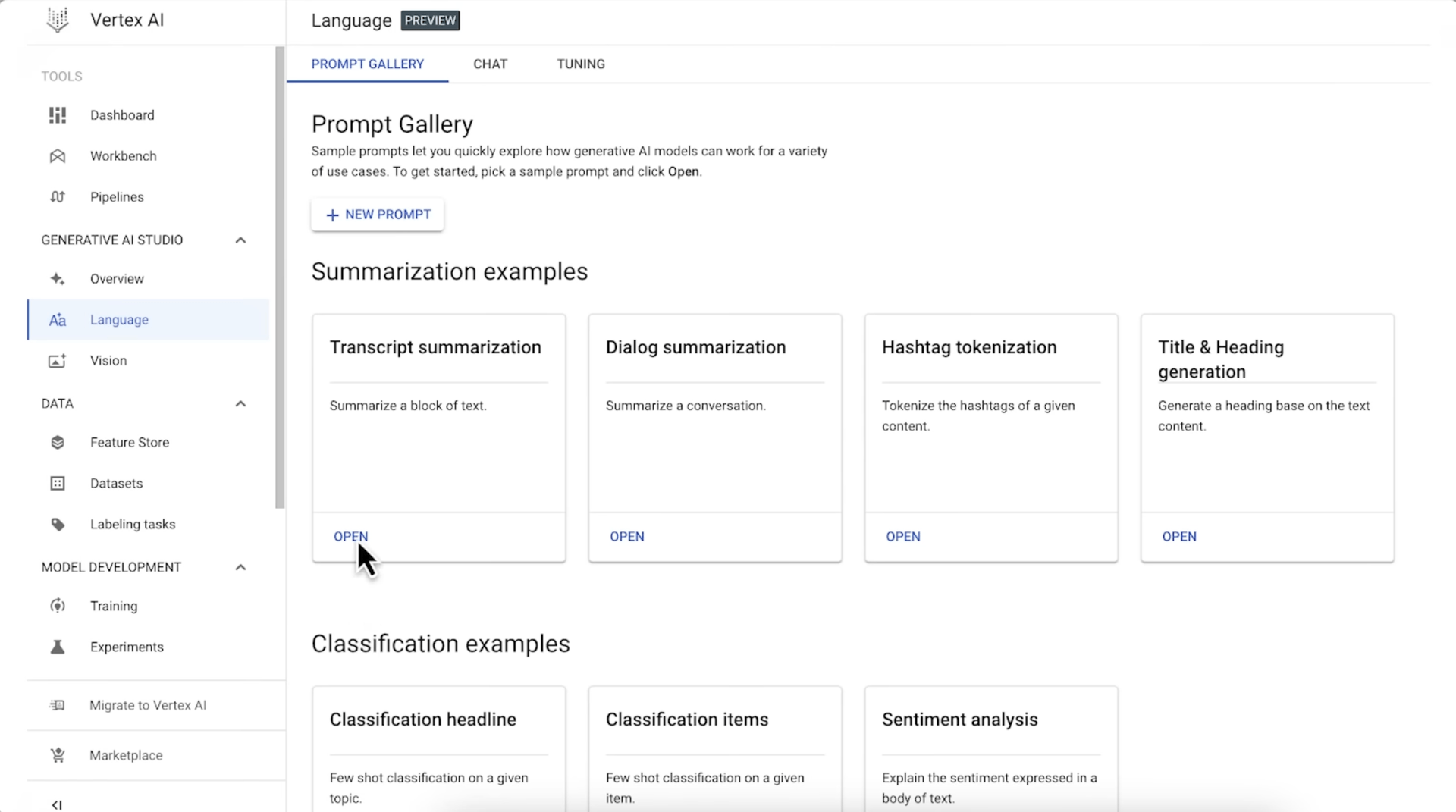

Gemini is accessible via Google AI Studio or Google Cloud Vertex AI.

Google Gemini Models

Table of supported Google Gemini models (as of 6/26/2024). Note: Gemini 1.0 Ultra and Gemini 1.0 Ultra Vision are GA with an allow list.

Azure OpenAI GPT

OpenAI needs almost no introduction. Since the release of ChatGPT to the public in November 2022, OpenAI GPT models have seen widespread adoption across various sectors. Their impact on the AI landscape has been substantial, catalyzing advances in research and development and raising public awareness to the point that many people now use "ChatGPT" as a synonym for AI chatbots.

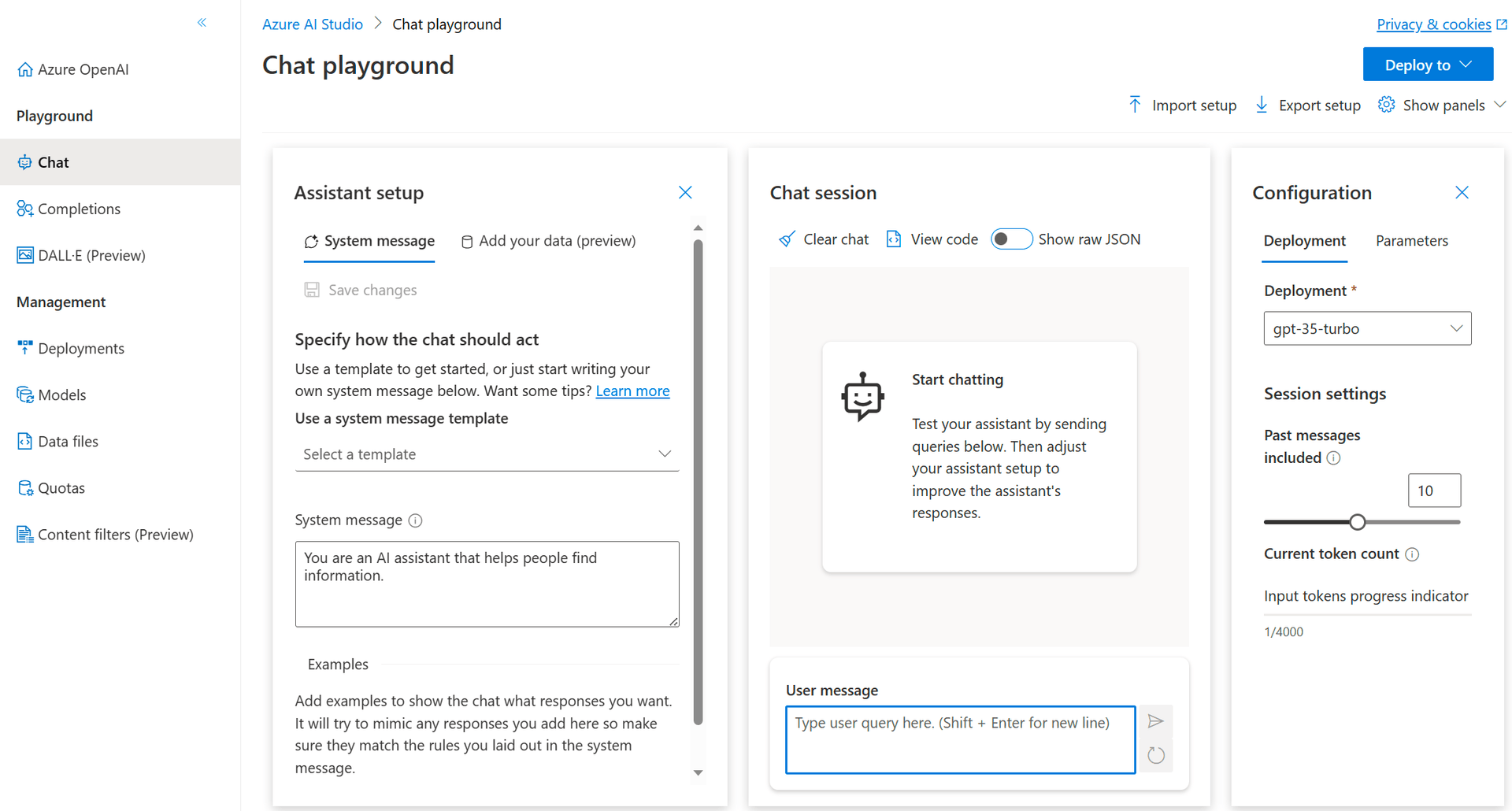

Azure OpenAI is a partnership between Azure and OpenAI that lets Azure users access OpenAI models (including the GPT models) via an API, a Python SDK, or a web-based interface, while authenticated with their Azure cloud credentials. Azure OpenAI distinguishes itself from OpenAI by offering co-developed APIs, enhanced security, and private networking. Throughout this article, "GPT models" refers exclusively to Azure OpenAI GPT models for the sake of brevity.

Azure OpenAI GPT Models

Table of supported Azure OpenAI GPT models (as of 6/26/2024).

Google Gemini vs Azure OpenAI GPT Models Functionality

Gemini has been met with skepticism from users, due in part to Bard falling short of expectations. Gemini 1.0 has received mixed reviews from users, with some saying it falls short of GPT-4 and some saying they prefer it over GPT-4. However, the highly anticipated Gemini 1.5 and its massive context window are receiving glowing accolades from early adopters, with predictions that it is poised to surpass GPT-4. Aside from comparing benchmarks of the two, we can compare them at a service and model level.

Google AI vs Azure AI Service Comparison

As for service offerings, Google and Azure provide varying levels of support.

- Documentation/Community: Based on anecdotal assessments, the documentation for both services is poor. Users of both complain about missing information and instructions that are hard to follow, and some users report that Google’s documentation is incorrect. This is likely because both services, and the models within them, are new and constantly changing.

- Accessibility: Both services can be accessed through APIs and cloud-based studios. Additionally, Azure OpenAI offers an SDK for developers. Both studios provide user-friendly interfaces, which can be particularly helpful for users with limited technical experience.

- Fine-Tuning Models: Supervised fine-tuning for Gemini 1.0 Pro is in preview. With Azure, you can fine-tune GPT-3.5 Turbo.

- Context Caching: Certain Gemini models support context caching for a separate charge, letting you cache input context that is reused across requests and possibly save money.

- Data Use: Neither Google nor Azure uses customer data to train their AI models.
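Whether context caching actually saves money depends on how often the cached context is reused. The following is a minimal sketch of that trade-off, assuming a billing scheme with a reduced per-token rate for cached input plus an hourly storage fee. The function names, the billing structure, and every price below are hypothetical placeholders, so check Google's pricing page for the real terms.

```python
def cost_without_cache(requests, shared_tokens, price_per_1k_input):
    """Every request pays the full input rate for the shared context."""
    return requests * shared_tokens / 1000 * price_per_1k_input


def cost_with_cache(requests, shared_tokens, price_per_1k_cached,
                    storage_price_per_1k_per_hour, hours_cached):
    """Each request pays an assumed reduced rate for the cached tokens,
    plus an assumed storage fee for keeping the context cached."""
    per_request = requests * shared_tokens / 1000 * price_per_1k_cached
    storage = shared_tokens / 1000 * storage_price_per_1k_per_hour * hours_cached
    return per_request + storage


# Placeholder numbers only; none of these are published prices.
baseline = cost_without_cache(requests=50, shared_tokens=100_000,
                              price_per_1k_input=0.004)
cached = cost_with_cache(requests=50, shared_tokens=100_000,
                         price_per_1k_cached=0.001,
                         storage_price_per_1k_per_hour=0.001,
                         hours_cached=2)
print(f"without cache: ${baseline:.2f}, with cache: ${cached:.2f}")
```

With few requests or a long cache lifetime, the storage fee can outweigh the per-request discount, which is why the saving is only "possible".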

Gemini vs GPT Model Comparison

As far as the actual models go, there are several quantitative factors to consider.

- Max Tokens: The max token amount varies per model, but both Gemini and GPT models offer similar options, ranging from 8k to 128k tokens. However, Gemini 1.5 takes the overwhelming lead with an unprecedented 1M token context window, providing users with an exceptional capacity for processing large amounts of data. To put it into perspective, 1M tokens correspond to an immense amount of data, equating to approximately "1 hour of video, 11 hours of audio, codebases with over 30,000 lines of code, or over 700,000 words."

- Supported Regions: Availability may be model and feature-specific. Check Google and Azure to see if your region is supported.

- Supported Languages: Gemini can be used in over 40 different languages, all of which are listed in Google's documentation. It is less clear which languages are supported for the GPT models. OpenAI has stated that the GPT models can be used with multiple languages but are optimized for English.

- Training Data Date: Gemini models have a knowledge cutoff of November 2023. The training data date is model-specific for GPT models: GPT-3.5 Turbo and GPT-4 were trained on data up to September 2021, while the GPT-4 Turbo versions were trained on data up to April 2023.
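To get a feel for the context window sizes in the list above, the quoted ratio of roughly 700,000 words per 1M tokens can be turned into a quick estimate. This is a rough sketch only: the ratio is a heuristic taken from that quote, real tokenizers vary by language and content, and the function names are illustrative.

```python
# Rough ratio taken from the quote above: ~700,000 words per 1M tokens.
WORDS_PER_MILLION_TOKENS = 700_000


def estimate_tokens(word_count: int) -> int:
    """Estimate the token count for a word count, rounding up."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-word_count * 1_000_000 // WORDS_PER_MILLION_TOKENS)


def fits_in_context(word_count: int, context_window: int) -> bool:
    """Return True if the estimated token count fits in the window."""
    return estimate_tokens(word_count) <= context_window


# A 100,000-word document against a 128k window and a 1M window.
print(fits_in_context(100_000, 128_000))
print(fits_in_context(100_000, 1_000_000))
```

Under this estimate, a 100,000-word document exceeds a 128k window but fits comfortably in a 1M window.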

Google Gemini Models Pricing

Pay-As-You-Go

Charges vary for different model types and input types.

Google Gemini model pricing table (as of 6/26/2024).

Azure OpenAI GPT Models Pricing

Charges for GPT models are fairly simple. Pricing is pay-as-you-go, with no commitment, and customization incurs additional charges. Prices vary by region; those shown are for the US East region.

Pay-As-You-Go

Charges vary by model type and context window size.

OpenAI GPT model pricing table (as of 6/26/2024).
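As a rough illustration of how a pay-as-you-go bill adds up, the sketch below assumes separate prices per 1,000 input and output tokens. The function name and the prices in the example are placeholders, not figures from the pricing table.

```python
def pay_as_you_go_cost(input_tokens, output_tokens,
                       input_price_per_1k, output_price_per_1k):
    """Estimate the cost of a workload billed per 1,000 tokens."""
    input_cost = input_tokens / 1000 * input_price_per_1k
    output_cost = output_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


# Placeholder prices; substitute the rates for your model and region.
cost = pay_as_you_go_cost(input_tokens=2_000, output_tokens=500,
                          input_price_per_1k=0.01, output_price_per_1k=0.03)
print(f"estimated cost: ${cost:.4f}")
```

Because output tokens are typically priced higher than input tokens, workloads that generate long responses can cost noticeably more than input-heavy ones with the same total token count.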

Fine-Tuning

Fine-tuning charges are based on training tokens and hosting time.

OpenAI GPT fine-tuning pricing table (as of 6/26/2024).
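Since fine-tuning charges combine training tokens and hosting time, a total can be estimated along the following lines. This is a sketch under assumed billing units (a price per 1,000 training tokens and a price per hosting hour); the function name and the rates shown are hypothetical.

```python
def fine_tuning_cost(training_tokens, price_per_1k_training_tokens,
                     hosting_hours, hosting_price_per_hour):
    """Estimate fine-tuning cost as training charges plus hosting charges."""
    training = training_tokens / 1000 * price_per_1k_training_tokens
    hosting = hosting_hours * hosting_price_per_hour
    return training + hosting


# Placeholder rates; hosting accrues for as long as the model is deployed.
total = fine_tuning_cost(training_tokens=1_000_000,
                         price_per_1k_training_tokens=0.008,
                         hosting_hours=24,
                         hosting_price_per_hour=1.5)
print(f"estimated fine-tuning cost: ${total:.2f}")
```

Note that hosting is a recurring charge, so for a long-lived deployment it can dominate the one-time training cost.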

Pricing Comparison: Gemini vs GPT Models

Based on benchmarks, user feedback, and use cases, the most comparable model pairs are Gemini 1.5 Pro and GPT-4o, and Gemini 1.0 Pro and GPT-4.

Gemini 1.5 Pro vs GPT-4o Pricing

Gemini 1.5 Pro is the more cost-effective choice compared to GPT-4o. Both its input and output tokens are priced 30% lower than GPT-4o's.

Gemini 1.0 Pro vs GPT-4 Pricing

Gemini 1.0 Pro is significantly less expensive than GPT-4. Its input tokens are priced 99.17% lower than GPT-4's and its output tokens are 98.75% lower. This dramatic price difference makes Gemini 1.0 Pro a good option for users looking to optimize costs.
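The "priced X% lower" figures in this comparison are relative differences against the more expensive model. A minimal sketch of that calculation follows; the prices in the example are placeholders chosen for easy arithmetic, not actual rates, and both prices must use the same unit (for example, per 1,000 tokens).

```python
def percent_lower(cheaper_price, baseline_price):
    """Return how much lower cheaper_price is than baseline_price, in percent."""
    if baseline_price <= 0:
        raise ValueError("baseline_price must be positive")
    return (baseline_price - cheaper_price) * 100 / baseline_price


# Placeholder prices in the same unit.
print(round(percent_lower(7.0, 10.0), 2))  # 30.0
```

When one provider bills per character and the other per token, convert to a common unit before comparing, since the conversion ratio directly affects the resulting percentage.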

Conclusion

The GPT models are still widely considered to be the most powerful AI models available on the market. However, based on the extraordinarily competitive pricing of Gemini, as well as the 1M context window with Gemini 1.5, Google has positioned itself as a serious competitor in the realm of AI.
